Digital images are defined by vast quantities of binary data. A modern digital SLR generates colour information for 10 or 20 megapixels every time the shutter is released, and the data for each pixel consists of up to 48 bits of binary information. Simple arithmetic reveals that 15 million pixels are defined by 720,000,000 bits of data - ie a string of ones and zeros filling approximately 250,000 sheets of A4 paper! Computers and microprocessors are so efficient at processing large volumes of data that it is easy to forget what they are doing for an average photographer.

Compression algorithms are used to reduce the amount of space required to store these huge amounts of data. The practical implementation of compression systems is far too complex to be explained here, but it is possible to indicate the principles involved. It should be emphasized from the outset that this article is intended to do nothing more than explore the basic principles. Anyone wishing to understand the detailed theoretical background should seek appropriate sources elsewhere.

There are two distinct types of compression algorithm - lossless and lossy. Lossless algorithms reduce the amount of data required to store an image in a manner that is completely reversible. When an image in decompressed, the information is returned to precisely its format prior to compression. The process can therefore be undertaken as many times as required without any loss of detail or quality in an image. Lossy compression is used to achieve a greater degree of compression but cannot be entirely reversed. When an image to which lossy compression has been applied is decompressed, some detail will be changed and the quality of the image will be somewhat degraded. The amount of degradation typically depends upon the extent to which an image has been compressed. Those subjected to extreme compression suffer the most. Also, remember that each time an image is saved using lossy compression, further degradation takes place. An image manipulated in this way therefore progressively decreases in quality. The JPEG, GIF and TIFF file formats all use compression.

Entropy coding is a form of compression in which frequently occurring patterns in data are represented by relatively little information, and rarely occurring formations are represented with a greater number of bits. Typically, such systems replace codes of equal length with codes whose length is proportional to the negative logarithm of the probability of their occurrence. The most common codes are consequently represented by the shortest codes. In a system known as run-length encoding, image elements are arranged in a zig-zag pattern to group similar frequencies. The basic principles of run-length coding, and a comparable system known as pointer coding, are relatively easy to understand. In practical applications, compression algorithms also make use of other coding systems such as Huffman and arithmetic coding.

Run-length encoding is very simple in principle. The list of binary digits defining an image is examined for instances of repeated digits. For example, look at the 48-bit binary string below:

111111111100000011110000000000111111111111000000

Since binary numbers use only two characters, it is almost inevitable that one character (either a "1" or a "0") will be repeated many times in some parts of a long sequence. In the example above it is possible to summarize the sequence as 10 x "1", 6 x "0", 4 x "1", 10 x "0", 12 x "1" and 6 x "0". It is therefore possible to rewrite the 48-bit number as:

1(10), 0(6), 1(4), 0(10), 1(12), 0(6)

Further, because we have a representation of "1" and "0", and we can know with which character the encoding process began, it is possible to rewrite the 48-bit number as:

10, 6, 4, 10, 12, 6

This process is completely reversible and hence a compression system using the principle would be described as lossless. Although the degree of compression in the case of this single 48-bit number is limited, when applied to an image represented by 720 million bits of data the saving would probably be enormous.

Another easy-to-understand principle is that of pointer coding which is particularly effective when applied to sparse data. Sparse data is a term used to describe a situation where the number of "1" characters vastly exceeds the number of "0" characters, or vice versa. For example, in the 48-bit number below there are only a few "1" characters.

000000001000000000110000000000001000000000000000

The sequence of characters above can therefore be written as 9, 19, 20, 33, the numbers representing the locations in the sequence where the character "1" occurs. This is generally known as the pointer representation of the "ones" in this example.

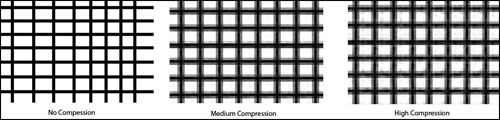

The JPEG compression algorithm, which is a lossy compression system in most forms, is widely used by photographers. Images compressed using the JPEG algorithm normally suffer some degradation, but the degree of loss of image quality depends not only on the extent of compression, but also the content of the image. As the degree of compression is increased, so degradation in the detailed high-frequency areas of an image become more apparent. Once a certain level of compression has been reached, an increasing number of artifacts become visible and the image eventually becomes unusable. The images below provides a simple illustration of how varying degrees of compression produce unwanted artifacts.In the left-hand image, the black grid lines remain clear, but in the centre image compression has produced countless artifacts which effectively widen and blur the lines. In the right-hand image, artifacts appear not only along the edges of the lines but also as blurred dots within the white squares.